K3s API Server Audit Logging

Audit logging must be enabled on the K3s control-plane host before any telemetry can be collected from it. The K3s hardening guide describes the API server audit configuration in detail: https://docs.k3s.io/security/hardening-guide#api-server-audit-configuration.

Start by creating a directory for the audit log:

    mkdir -p -m 744 /var/lib/rancher/k3s/server/logs

Next, create a default audit policy, audit.yaml, in /var/lib/rancher/k3s/server.

A simple policy manifest that logs only metadata looks like this:

    apiVersion: audit.k8s.io/v1
    kind: Policy
    rules:
    - level: Metadata
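The policy file can also be written from the shell with a heredoc. This is a minimal sketch, assuming a POSIX shell with write access to the target directory (a root shell on the server); the `write_audit_policy` helper and its optional directory argument are hypothetical names used only for illustration and are not part of K3s.

```shell
# Hypothetical helper: writes the metadata-only audit policy from this guide.
# The target directory defaults to the K3s server directory used above.
write_audit_policy() {
  dir="${1:-/var/lib/rancher/k3s/server}"
  # The quoted 'EOF' keeps the shell from expanding anything in the body.
  cat > "$dir/audit.yaml" <<'EOF'
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
- level: Metadata
EOF
}
```

Calling `write_audit_policy` with no argument writes /var/lib/rancher/k3s/server/audit.yaml; passing a directory writes the file there instead, which is handy for a dry run.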

Then add the audit options to the ExecStart command in the k3s systemd service file (typically /etc/systemd/system/k3s.service):

    ...
    ExecStart=/usr/local/bin/k3s \
        server \
        ...
        '--kube-apiserver-arg=audit-log-path=/var/lib/rancher/k3s/server/logs/audit.log' \
        '--kube-apiserver-arg=audit-policy-file=/var/lib/rancher/k3s/server/audit.yaml' \

We should see an audit.log file in /var/lib/rancher/k3s/server/logs/ once the K3s Server service is restarted.

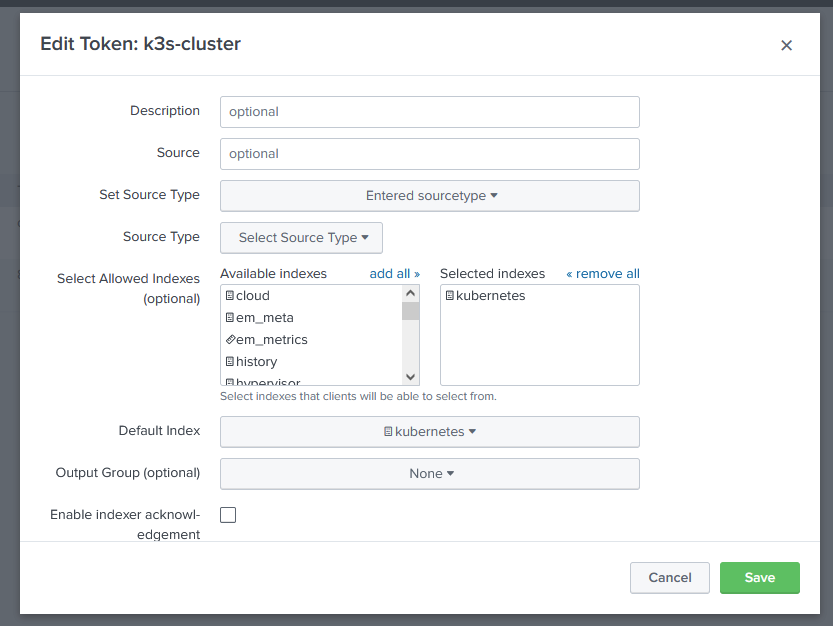
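A quick check from the shell can confirm that the API server is actually writing audit events. The sketch below assumes the default JSON audit format, where each line is one compact record containing `"kind":"Event"`; the `check_audit_log` helper name is hypothetical.

```shell
# Hypothetical helper: succeeds if the audit log exists, is non-empty,
# and its last line looks like an audit.k8s.io Event record.
# Assumes the API server's default JSON log format (one event per line).
check_audit_log() {
  log="${1:-/var/lib/rancher/k3s/server/logs/audit.log}"
  [ -s "$log" ] || { echo "missing or empty: $log" >&2; return 1; }
  tail -n 1 "$log" | grep -q '"kind":"Event"' || {
    echo "last line is not an audit Event: $log" >&2
    return 1
  }
  # grep -c '' counts lines, i.e. the number of events logged so far.
  echo "ok: $(grep -c '' "$log") audit events in $log"
}
```

After reloading systemd and restarting the service (for a default install, `systemctl daemon-reload` followed by `systemctl restart k3s`), running `check_audit_log` as root should report a growing event count.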

Collect Logs With Splunk OTEL

We are now ready to deploy an updated Splunk OTEL Collector configuration that monitors the K3s server audit log. This is done by adding extraFileLogs and agent stanzas to the my_customized_values.yaml file that was used to initially deploy the splunk-otel-collector:

    logsCollection:
      ...
      extraFileLogs:
        filelog/audit-log:
          include: [/var/lib/rancher/k3s/server/logs/audit.log]
          start_at: beginning
          include_file_path: true
          include_file_name: false
          resource:
            com.splunk.source: /var/lib/rancher/k3s/server/logs/audit.log
            host.name: 'EXPR(env("K8S_NODE_NAME"))'
            com.splunk.sourcetype: kube:apiserver-audit
    agent:
      extraVolumeMounts:
        - name: audit-log
          mountPath: /var/lib/rancher/k3s/server/logs
      extraVolumes:
        - name: audit-log
          hostPath:
            path: /var/lib/rancher/k3s/server/logs

Let's review the important parts of this manifest. First, extraFileLogs defines an additional set of log files for the DaemonSet's container to collect, selected through the include directive. The resource directive sets the Splunk-related values for host, source, and sourcetype.

Next, the agent section configures the DaemonSet to mount the K3s server logs directory inside the container running the OTEL collector. agent.extraVolumes.hostPath points to the logging path on the Control Node, and agent.extraVolumeMounts.mountPath exposes it at the same path inside the container, so the include path above resolves correctly.

Finally, run helm to apply the updated values:

    helm upgrade -n infra splunk-otel-collector splunk-otel-collector-chart/splunk-otel-collector -f my_customized_values.yaml
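To confirm that the release picked up the new stanzas, the user-supplied values can be read back with `helm get values` and searched for the two keys added above. This is only a sketch: the `has_audit_log_config` helper is a hypothetical name, and it does a plain text match rather than parsing the YAML.

```shell
# Hypothetical helper: reads Helm values (YAML) on stdin and succeeds only if
# both the filelog/audit-log receiver and the audit-log volume are present.
# It matches text, so it does not validate indentation or structure.
has_audit_log_config() {
  values=$(cat)
  printf '%s\n' "$values" | grep -q 'filelog/audit-log:' &&
    printf '%s\n' "$values" | grep -q 'name: audit-log'
}
```

For example, `helm get values -n infra splunk-otel-collector | has_audit_log_config && echo "audit log collection configured"` prints the message only when both entries are found in the deployed values.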