Logging K3s Telemetry to Splunk

In a Kubernetes cluster, container logs are kept locally on the worker node where the container is running. To send this information to a central location, we must collect these log files from each node.

One method is to use a node-level logging agent, deployed as a DaemonSet with access to the node's log directory. A DaemonSet ensures that a copy of a Pod runs on every eligible node in the cluster, which makes it a good fit when you want the same tooling deployed cluster-wide. Using a DaemonSet lets us collect container logs from the Pods on each worker node. In this configuration, I will be using Splunk's distribution of the OpenTelemetry (OTEL) Collector to send logs to a centralized Splunk index.

Deploying the Splunk OTEL Collector as a DaemonSet on K3s is straightforward using a Helm chart to configure the application. Details can be found at https://github.com/signalfx/splunk-otel-collector-chart.
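The install command assumes the chart repository is already registered with Helm under the name splunk-otel-collector-chart. A minimal sketch of that prerequisite follows; the repository URL is the one I understand the chart's README to publish, so treat it as an assumption and check the README if the add step fails.

```shell
# Assumed values: the repo alias must match the prefix used in `helm install`.
CHART_REPO_NAME="splunk-otel-collector-chart"
CHART_REPO_URL="https://signalfx.github.io/splunk-otel-collector-chart"

if command -v helm >/dev/null 2>&1; then
  # Register the chart repository and refresh the local index.
  helm repo add "$CHART_REPO_NAME" "$CHART_REPO_URL"
  helm repo update
else
  echo "helm not found on PATH; install Helm first" >&2
fi
```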

helm install splunk-otel-collector --values my_customized_values.yaml splunk-otel-collector-chart/splunk-otel-collector

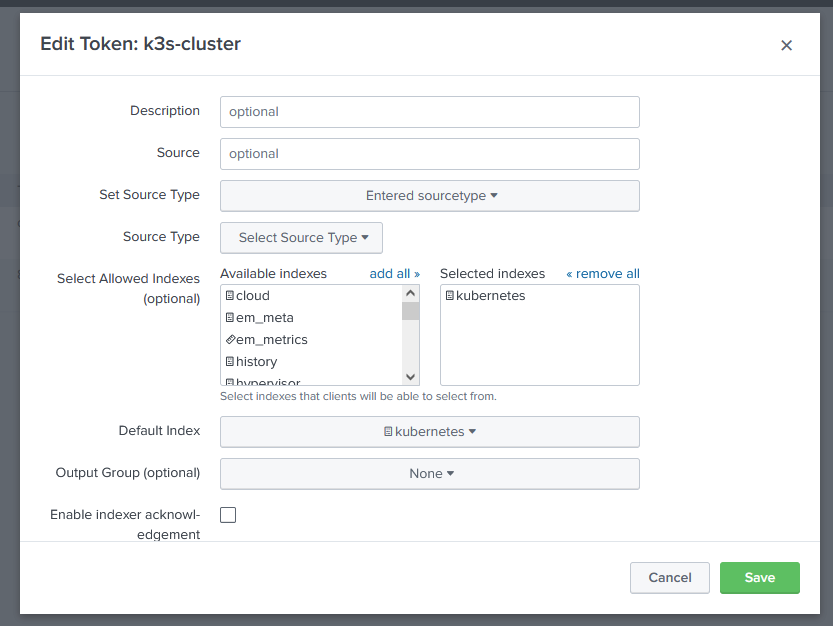

Make sure to set up an HTTP Event Collector (HEC) input on your Splunk instance first. More information on HEC setup can be found in Splunk's documentation: https://docs.splunk.com/Documentation/Splunk/9.0.4/Data/UsetheHTTPEventCollector.
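Before pointing the collector at HEC, it can help to confirm that the input accepts events at all. The sketch below posts one test event with curl. The helper names (hec_payload, send_test_event) are hypothetical, and the URL, token, and index are placeholders to replace with your own values. The -k flag skips TLS verification, which only makes sense for a self-signed certificate.

```shell
# Assumed values: substitute your own HEC endpoint, token, and index.
HEC_URL="https://10.0.90.102:8088/services/collector/event"
HEC_TOKEN="YOUR_HEC_TOKEN"
HEC_INDEX="kubernetes"

# Build the JSON body for a single event (hypothetical helper).
# The message is inserted verbatim, so it must not contain double quotes.
hec_payload() {
  printf '{"event": "%s", "index": "%s"}' "$1" "$2"
}

# Post one test event to HEC using the Splunk token authorization header.
send_test_event() {
  curl -k --max-time 10 "$HEC_URL" \
    -H "Authorization: Splunk $HEC_TOKEN" \
    -d "$(hec_payload "HEC smoke test from K3s" "$HEC_INDEX")"
}

# Uncomment to run against a live Splunk instance:
# send_test_event
```

If the input is healthy, Splunk should answer with a short JSON body reporting success, and the event should be searchable in the target index shortly afterwards.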

Now, the interesting configuration lives in my_customized_values.yaml. The endpoint parameter points to the HEC endpoint on your Splunk indexer.

    clusterName: "k3s-cluster"
    splunkPlatform:
      endpoint: "https://10.0.90.102:8088/services/collector"
      token: "YOUR_HEC_TOKEN"
      index: "kubernetes"
      insecureSkipVerify: true
    logsEngine: otel
    tolerations:
      - key: CriticalAddonsOnly
        operator: Exists
    logsCollection:
      containers:
        # Set useSplunkIncludeAnnotation flag to `true` to collect logs from pods with `splunk.com/include: true` annotation and ignore others.
        # All other logs will be ignored.
        useSplunkIncludeAnnotation: true

Tolerations are configured to allow the DaemonSet to run on the K3s control-plane node. This allows us to collect logs from the containers running on the control plane, including Traefik, and later, audit logs from the API server. We set useSplunkIncludeAnnotation: true in the logsCollection.containers section to have more control over which logs are collected. With this setting, the OTEL Collector only collects logs from resources that carry the include annotation. By default, the Splunk OTEL Collector collects all container logs unless an exclude annotation is found on a resource.

For the most part, I've taken to applying the Splunk include annotation to namespaces, which lets me collect logs from every Pod running application workloads there. This allows me to ramp up log collection across the cluster gradually, as monitoring requirements dictate.
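Annotating a namespace does not need a patch file; kubectl annotate can set the annotation directly. A minimal sketch, assuming a hypothetical namespace called my-app:

```shell
NAMESPACE="my-app"                    # hypothetical namespace name
ANNOTATION="splunk.com/include=true"  # key=value form expected by kubectl annotate

if command -v kubectl >/dev/null 2>&1; then
  # Add (or overwrite) the include annotation on the namespace.
  kubectl annotate namespace "$NAMESPACE" "$ANNOTATION" --overwrite
  # Print the namespace annotations to confirm the change.
  kubectl get namespace "$NAMESPACE" -o jsonpath='{.metadata.annotations}'
  echo
else
  echo "kubectl not found on PATH" >&2
fi
```

Removing the annotation later is the same command with a trailing dash on the key, that is, splunk.com/include- in place of the key=value pair.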

For an individual workload, create a patch file (here, splunk-otel-patch.yaml) to be applied:

    # Deployment Template spec
    spec:
      template:
        metadata:
          annotations:
            splunk.com/include: "true"

Then apply the patch to the Deployment or StatefulSet you would like to include in logging, for example, Traefik. A Namespace has no Pod template, so it is annotated directly in its metadata instead.

kubectl -n kube-system patch deployments.apps traefik --patch-file splunk-otel-patch.yaml

Patching the Pod template triggers a rollout, and once the new Traefik Pod is running, its logs should start flowing into the kubernetes index in Splunk.
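If nothing shows up in the index, a reasonable first check is whether the annotation actually landed on the Pod template. A small sketch, using the namespace and Deployment name from the patch command:

```shell
NS="kube-system"
DEPLOY="traefik"

if command -v kubectl >/dev/null 2>&1; then
  # Wait for the patched Deployment to finish rolling out new Pods.
  kubectl -n "$NS" rollout status "deployment/$DEPLOY" --timeout=120s
  # Print the Pod template annotations; splunk.com/include should be listed.
  kubectl -n "$NS" get deployment "$DEPLOY" \
    -o jsonpath='{.spec.template.metadata.annotations}'
  echo
else
  echo "kubectl not found on PATH" >&2
fi
```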